Let's talk about how to actually get things done with AI. Here are nine effective strategies for agent-driven development.

TLDR — Quick Takeaways

- Cross-reference architecture — Tell your agent where related projects live (

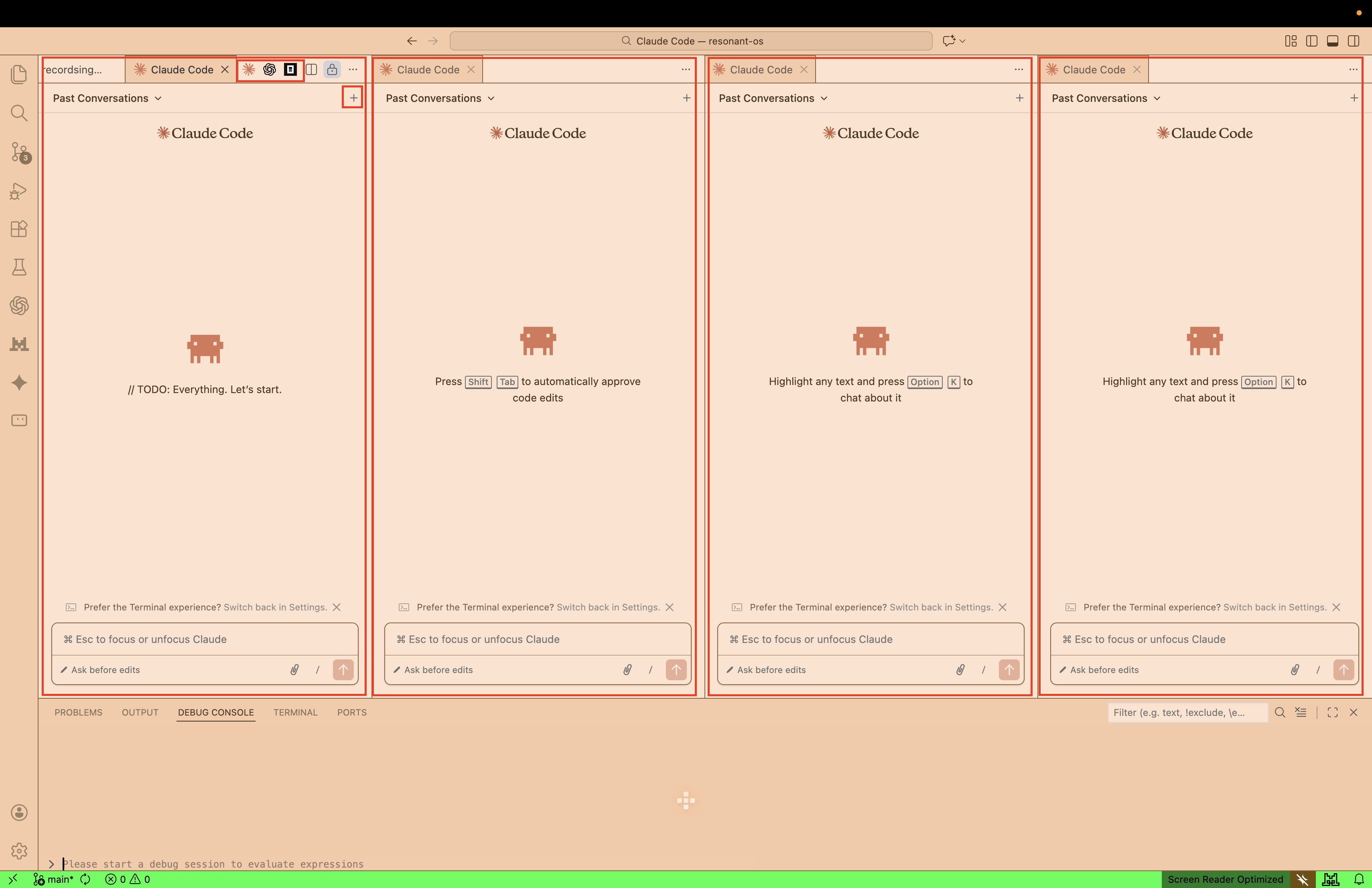

../app,../website) in your CLAUDE.md - Multi-panel workflow — Run 3-4 Claude Code agents in parallel, each focused on one problem

- Design persona — Give the agent a role + references (screenshots, code snippets) at project start, not end

- Isolate scope — Have the agent interview you to narrow scope before executing

- Spawn sub-agents — Keep an agents/ folder with domain-specific configs (ui.md, api.md, testing.md)

- Rule of Threes — If an error happens 3x, stop retrying and log the fix to devlog.md

- Skills + docs loop — Use skills.sh, but a solid agents.md outperforms skills in evals

- Optimize for agents — Split big files, add NAV_MAP.md files, reduce context window spend

- Use popular tech — Stick to well-documented frameworks (Next.js, Rails) and force current docs with version numbers

1. Cross-Reference Your Architecture

    ┌─────────────────┐         ┌─────────────────┐
    │  /app           │         │  /website       │
    │  ─────────      │         │  ─────────      │
    │  backend/       │◄───────►│  frontend/      │
    │  api/           │         │  components/    │
    │  CLAUDE.md ─────┼────┐    │  CLAUDE.md ─────┤
    └─────────────────┘    │    └─────────────────┘
                           ▼
                  ┌─────────────────┐
                  │ Shared Context  │
                  │ "I know where   │
                  │  both live"     │
                  └─────────────────┘

When you're working on a project that has both an application and a website, put them in a shared parent directory. The key is to tell the agent where the other project lives: just add it to your CLAUDE.md or agents.md file. Something as simple as "the backend lives at ../app" or "the website is one directory up at ../website" is enough.

This shared awareness prevents hallucinations about file paths and keeps the context tight between your front end and back end. The agent won't guess or make up paths when it knows exactly where to look.
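One way to keep those pointers honest is to check that every relative path mentioned in the instructions file still exists on disk. The sketch below is only an illustration in Python: the function name `missing_references` and the regular expression are assumptions, not part of Claude Code or any agent tooling.

```python
import re
from pathlib import Path

# Illustrative pattern for relative paths such as ../app or ../website/docs.
RELATIVE_PATH = re.compile(r"(?<![\w/.])\.\./[\w\-./]+")


def missing_references(instructions: str, project_dir: Path) -> list[str]:
    """Return relative paths named in the instructions that do not exist."""
    # Strip trailing punctuation picked up from prose, then de-duplicate.
    refs = {match.rstrip(".,/") for match in RELATIVE_PATH.findall(instructions)}
    return sorted(
        ref for ref in refs if ref and not (project_dir / ref).exists()
    )
```

Running it against the text of CLAUDE.md from inside the website directory would list any sibling path the agent has been told about but cannot actually reach.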

2. The Multi-Panel Workflow

    ┌─────────────────────────── VS Code ───────────────────────────┐
    │  ┌──────────────────┬──────────────────┬──────────────────┐   │
    │  │ Claude Code #1   │ Claude Code #2   │ Claude Code #3   │   │
    │  │ ══════════════   │ ══════════════   │ ══════════════   │   │
    │  │ Focus: UI        │ Focus: API       │ Focus: Auth      │   │
    │  │                  │                  │                  │   │
    │  │ > components/    │ > routes/        │ > auth/          │   │
    │  │ > styles/        │ > middleware/    │ > sessions/      │   │
    │  │ > hooks/         │ > handlers/      │ > tokens/        │   │
    │  │                  │                  │                  │   │
    │  │ Context: 12k     │ Context: 8k      │ Context: 6k      │   │
    │  └──────────────────┴──────────────────┴──────────────────┘   │
    └───────────────────────────────────────────────────────────────┘
                │                  │                  │
                └──────────────────┼──────────────────┘
                                   ▼
                 Each agent focused on its own problem

Open up multiple Claude Code agents across different VS Code panels. Three to four are probably best to run at once—I'm on a Max plan which allows concurrent agents. If you're Boris Cherny and you work at Anthropic with an unlimited agent and context budget, you can probably run 10 at once. If you want to see what he does, check out this breakdown of his workflow.

Organize your work by having each agent panel represent a specific problem. That could be front end, back end, or mixed. It could be a bunch of different components on the front end, but it should focus on one project—the website, for instance—and different aspects of it. Each agent maintains its own context window focused on its specific task rather than loading the entire codebase into every conversation.

3. Establish a "World Class" Design Persona

                  ╔═══════════════════════════════╗
                  ║ "World Class UI/UX Designer"  ║
                  ╚═══════════════════════════════╝
                                  │
            ┌─────────────────────┼─────────────────────┐
            ▼                     ▼                     ▼
    ┌───────────────┐     ┌───────────────┐     ┌───────────────┐
    │  Sub-Agent A  │     │  Sub-Agent B  │     │  Sub-Agent C  │
    │  Component    │     │  Layout       │     │  Animation    │
    └───────────────┘     └───────────────┘     └───────────────┘
            │                     │                     │
            └─────────────────────┼─────────────────────┘
                                  ▼
                     ┌─────────────────────────┐
                     │  Consistent Standards   │
                     │  Grid · Color · Motion  │
                     └─────────────────────────┘

Ask the agent to create a design kit with library information, descriptions, and quality standards. Give it a behavior or a role—for example, a "World Class UI/UX Designer."

You do this because you want it to adopt that persona throughout the project. You can tell sub-agents to create components or materials utilizing this basis so that the design standards are consistent across the entire application.

Level One: A prompt describing what you want (usually folded/hidden).

Level Two: Giving it a role, asking it for the design standards and common practices of that role, and then utilizing that as a system prompt.

Level Three: Including screenshots, reference images, code snippets from design libraries, or links to components you want to emulate. Show the agent what "good" looks like—paste in a Radix UI component example, link to a Tailwind template, or screenshot a design you admire. The more concrete the reference, the closer the output.

For example, ask: "What are the most common techniques in design, and how can we incorporate them? How do we use UI and UX design to achieve visual harmony, grid systems, etc.?"

Once these standards are established, the agent keeps applying them, which makes the output far more consistent.

It's always better to do this at the beginning of a project. If you try to establish design standards at the end, the AI will just do reskins—surface-level changes to colors, fonts, and spacing. If you want structural differences in how components are laid out, how information flows, or how interactions work, you have to define that at the start of any design work.
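Levels Two and Three can be folded into one reusable system prompt. The following is a minimal Python sketch under stated assumptions: the `DesignBrief` class, its fields, and the prompt wording are hypothetical and only show how a role, its standards, and concrete references might be combined before any design work starts.

```python
from dataclasses import dataclass, field


@dataclass
class DesignBrief:
    """Hypothetical container for a design persona and its supporting material."""

    role: str
    standards: list[str] = field(default_factory=list)
    references: list[str] = field(default_factory=list)

    def to_system_prompt(self) -> str:
        """Render the role (Level Two) and references (Level Three) as text."""
        lines = [f"You are a {self.role}."]
        if self.standards:
            lines.append("Follow these design standards in every component:")
            lines += [f"- {item}" for item in self.standards]
        if self.references:
            lines.append("Match the quality of these references:")
            lines += [f"- {item}" for item in self.references]
        return "\n".join(lines)
```

The same rendered prompt can then be handed to every sub-agent, so each one starts from identical standards.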

4. Isolate Scope and Plan

    ┌─────────────────────────────────────────────────────┐
    │          "Build me an e-commerce platform"          │
    └─────────────────────────────────────────────────────┘
                               │
                     Agent interviews you
                               │
                               ▼
           ┌───────────────────────────────────────┐
           │      Isolated: "Product catalog"      │
           └───────────────────────────────────────┘
                               │
                               ▼
                 ┌───────────────────────────┐
                 │  Task 1: Product model    │
                 │  Task 2: List view        │
                 │  Task 3: Detail page      │
                 └───────────────────────────┘
                               │
                               ▼
                       ┌───────────────┐
                       │    Execute    │
                       │  one by one   │
                       └───────────────┘

Don't do too much at once. Ask the agent to plan and interview you to isolate your problem and scope.

What you don't want to do is tell it to execute all of those things at once—unless you tell it to make the plan, spawn sub-agents, and then ask each of those agents to adhere to the design standard and act individually. This ensures the output is consistently good across all tasks.

5. Spawn Sub-Agents

                         ┌─────────────────────┐
                         │    Primary Agent    │
                         │    (Coordinator)    │
                         └──────────┬──────────┘
                                    │
                      "Spawn agents for each task"
                                    │
              ┌─────────────────────┼─────────────────────┐
              ▼                     ▼                     ▼
    ┌───────────────────┐ ┌───────────────────┐ ┌───────────────────┐
    │ Sub-Agent #1      │ │ Sub-Agent #2      │ │ Sub-Agent #3      │
    │ agents/ui.md      │ │ agents/api.md     │ │ agents/testing.md │
    └───────────────────┘ └───────────────────┘ └───────────────────┘
              │                     │                     │
              ▼                     ▼                     ▼
        UI Components          API Routes            Test Suite

We're going to see Agent Swarms become mainstream in 2026. Get ahead of this by telling your AI to spawn sub-agents for parallel tasks.

The trick is to have different agent configuration files prepared for specific kinds of work. Keep an agents/ directory with files like ui.md, api.md, testing.md—each with tailored instructions for that domain. When your primary agent spawns a sub-agent, point it to the relevant config file so it inherits the right context and constraints.

This turns one agent into an orchestrator that delegates to specialists. Instead of one agent context-switching between UI work, API logic, and tests, you get focused agents that do one thing well.
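The delegation step can be sketched as a small routing function. This is a hedged Python illustration, not how any particular agent tool spawns sub-agents: the keyword table, `pick_config`, and `sub_agent_prompt` are all hypothetical, and only the agents/ file names come from the layout described above.

```python
from pathlib import Path
from typing import Optional

# Hypothetical keyword table; the file names follow the agents/ layout above.
DOMAIN_KEYWORDS = {
    "ui.md": ("component", "layout", "style", "css"),
    "api.md": ("endpoint", "route", "handler", "middleware"),
    "testing.md": ("test", "coverage", "fixture"),
}


def pick_config(task: str, agents_dir: Path = Path("agents")) -> Optional[Path]:
    """Return the first config file whose keywords appear in the task text."""
    lowered = task.lower()
    for filename, keywords in DOMAIN_KEYWORDS.items():
        if any(word in lowered for word in keywords):
            return agents_dir / filename
    return None


def sub_agent_prompt(task: str, agents_dir: Path = Path("agents")) -> str:
    """Build the instruction the coordinator hands to a sub-agent."""
    config = pick_config(task, agents_dir)
    if config is None:
        return f"Task: {task}"
    return f"Read {config.as_posix()} first and follow its constraints.\nTask: {task}"
```

Keyword matching is deliberately crude here; in practice the coordinating agent can usually pick the right config itself once it knows the files exist.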

6. The Rule of Threes

    Error: "Cannot find module 'xyz'"

    Attempt 1: ✗ ─────────────────────────────────► Retry
    Attempt 2: ✗ ─────────────────────────────────► Retry
    Attempt 3: ✗ ─────────────────────────────────► STOP
                                                     │
                     ┌───────────────────────────────┘
                     ▼
    ┌─────────────────────────────────┐
    │  Analyze root cause             │
    │  Generate permanent fix         │
    │  Log to devlog.md → "Learnings" │
    └─────────────────────────────────┘
                     │
                     ▼
    ┌─────────────────────────────────┐
    │  Future sessions retrieve this  │
    │  context automatically          │
    └─────────────────────────────────┘

Implement a "Rule of Threes." This is a logic rule: if the agent hits the same error or problem three times, it stops retrying, creates a fix, and records that fix as a learning in the devlog. If the problem comes up again, the fix is already on record, so the system improves itself almost automatically.
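The counting logic itself is small. As a rough Python sketch, assuming a hypothetical `RuleOfThree` helper that an agent harness might call after each failed attempt (nothing here is a real agent API):

```python
from collections import Counter


class RuleOfThree:
    """Hypothetical per-session tracker for repeated errors."""

    def __init__(self, limit: int = 3) -> None:
        self.limit = limit
        self.counts = Counter()

    def record(self, error_message: str) -> str:
        """Count one failure and return "retry" or "stop"."""
        key = error_message.strip().lower()  # treat trivially different copies as equal
        self.counts[key] += 1
        return "stop" if self.counts[key] >= self.limit else "retry"

    @staticmethod
    def learning_entry(error_message: str, cause: str, fix: str) -> str:
        """Format a note suitable for a "Learnings" section in devlog.md."""
        return f"- Error: {error_message}\n  Cause: {cause}\n  Fix: {fix}"
```

The third identical failure returns "stop", which is the cue to analyze the root cause instead of trying a fourth time.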

Here is an example of a skill prompt that enforces this rule:

# Skill: Rule of Three Error Handling

When executing code or running tests, maintain a counter for specific error types encountered during a single session.

If the same error occurs 3 times:

1. Stop execution immediately.

2. Analyze the root cause rather than attempting a fourth retry.

3. Generate a permanent fix (e.g., updating a dependency, refactoring the function, or adjusting the environment config).

4. Log the incident in devlog.md under a "Learnings" section, detailing the error, the cause, and the fix, so this context is retrieved in future sessions.

7. Skills, Docs, and Refactoring Loops

    ┌──────────────┐
    │   Generate   │
    │     Code     │
    └──────┬───────┘
           │
           ▼
    ┌──────────────┐        ┌─────────────────┐
    │   Document   │◄──────►│  agents.md      │
    │   Changes    │        │  devlog.md      │
    └──────┬───────┘        │  learnings.md   │
           │                └─────────────────┘
           ▼
    ┌──────────────┐        ┌─────────────────┐
    │   Refactor   │◄──────►│  skills.sh      │
    │   & Clean    │        │  Best practices │
    └──────┬───────┘        └─────────────────┘
           │
           └────────────────────┐
                                ▼
                       (back to Generate)

Use skills. If you give your agent skills, it can reference them when problems arise. For example, Vercel released a React and Next.js best practices skill that agents can use to follow performance patterns and avoid common pitfalls.

That said, Vercel's own research shows that a well-crafted agents.md file actually outperforms skills in their agent evaluations. So while skills.sh is great, a really solid agents.md file will go a long way. You should always initialize a project with one.

You also want a documentation log, a learning log, and to ask your agent to refactor the codebase often.

The point of the devlog is to have time-stamped context historically throughout the entire development of the project. The AI can reference what happened, what it learned, and what mistakes were made. You can always tell it to add to the devlog—after a session, after fixing a tricky bug, after making an architectural decision. This becomes institutional memory that persists across sessions and agents.

When you start working on a new part of the project, tell the agent to check the devlog first. Point it to what worked: "Last time we did X this way and it was successful—adhere to that approach." This reinforces good patterns and reduces repeated mistakes.
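Appending to the devlog is easy to script. A minimal Python sketch, assuming a hypothetical `append_devlog` helper and a heading format of my own choosing (the article only specifies that entries are time-stamped):

```python
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


def append_devlog(
    path: Path, title: str, notes: str, now: Optional[datetime] = None
) -> str:
    """Append one time-stamped entry to the devlog and return the text written."""
    # Callers that pass `now` are assumed to pass a UTC datetime.
    moment = now or datetime.now(timezone.utc)
    stamp = moment.strftime("%Y-%m-%d %H:%M UTC")
    entry = f"\n## {stamp} - {title}\n\n{notes.strip()}\n"
    with path.open("a", encoding="utf-8") as log:  # append mode creates the file
        log.write(entry)
    return entry
```

Because entries are only ever appended, the file keeps its chronological order and earlier context is never overwritten.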

As of February 3rd, 2026, I use OpenAI's Codex 5.2 to review my whole codebase, refactor things, fix hard bugs, and make plans. Then, I use Claude Code and Kimi k2.5 to actually do the work. I've noticed that overall, the more I document, the better it gets. And the more "hands-off" my agent becomes, the more accurate it becomes.

Regarding refactoring: I spend a lot of time generating code, but I will also ask my agent to do a clean-up sweep. I specifically ask it to look for unused imports, conflicts, contradictions, and dead code.
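To make "unused imports" concrete, here is a small Python sketch that finds them with the standard `ast` module. It only handles Python source and is an illustration of what such a sweep checks, not a replacement for a real linter; the function name `unused_imports` is an assumption.

```python
import ast


def unused_imports(source: str) -> list[str]:
    """Return imported names that are never referenced in the module."""
    tree = ast.parse(source)
    imported = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # `import a.b` binds the top-level name `a`.
                imported.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                if alias.name != "*":  # star imports cannot be checked this way
                    imported.add(alias.asname or alias.name)
    # Every identifier reference appears as an ast.Name node somewhere.
    used = {node.id for node in ast.walk(tree) if isinstance(node, ast.Name)}
    return sorted(imported - used)
```

Names that are only re-exported (for example through `__all__`) would be reported too, so the result is a list of candidates to review rather than a list of safe deletions.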

Here is a skill you can use to detect these issues:

# Skill: Code Hygiene & Conflict Detection

Run a static analysis scan on the target directory. Identify and list:

- Unused imports and declared but unused variables.

- Dead code blocks (functions or components not referenced anywhere).

- Logical contradictions (e.g., config files checking for opposite environments simultaneously).

For every issue found, propose a refactor that removes the bloat without altering the core application logic.

8. Optimizing Code for Agents (Not Just Humans)

    BEFORE                            AFTER
    ══════                            ═════

    src/                              src/
    └── app.js (3000 lines)           ├── components/
             │                        │   ├── NAV_MAP.md  ← Agent reads this
             │  Agent loads           │   ├── Header.js
             │  entire file           │   ├── Footer.js
             │                        │   └── Sidebar.js
             ▼                        ├── hooks/
    ┌─────────────────┐               │   ├── NAV_MAP.md
    │  Context: 45k   │               │   ├── useAuth.js
    │  tokens         │               │   └── useData.js
    │                 │               └── utils/
    │  Agent confused │                   ├── NAV_MAP.md
    └─────────────────┘                   └── helpers.js

                                      Context: 8k tokens per task
                                      Agent navigates efficiently

Beyond standard refactoring, ask the agent to think about how to make the codebase easier for an agent to navigate. If the agent has to search through the code on every task and nothing helps it find its way, it will get confused. Agents are extremely good at creating code documentation that helps other agents read the codebase.

This involves refactoring big files into smaller ones and asking architectural questions like: "What would a senior engineer do? What would a high-level production team do to build out this project? How would they manage it?"

If you have huge files, with thousands of lines of code each, every read eats a large share of the context window. But if you split them into small directories with individual components and place a navigation map (NAV_MAP.md) at the top of every directory, the agent navigates far better while your context window spend goes down.
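A navigation map can be generated rather than written by hand. The Python sketch below is one possible shape for it, under stated assumptions: the NAV_MAP.md file name comes from the article, while the `write_nav_maps` function, the line-count listing, and the 300-line threshold are illustrative choices of mine.

```python
from pathlib import Path

NAV_FILE = "NAV_MAP.md"  # name from the article; the format below is an assumption


def write_nav_maps(root: Path, max_lines: int = 300) -> list[Path]:
    """Write a NAV_MAP.md into every directory under root that contains files."""
    written = []
    directories = [root] + sorted(p for p in root.rglob("*") if p.is_dir())
    for directory in directories:
        files = sorted(
            f for f in directory.iterdir() if f.is_file() and f.name != NAV_FILE
        )
        if not files:
            continue
        label = directory.relative_to(root).as_posix()
        lines = [f"# Navigation map: {label}", ""]
        for f in files:
            count = len(f.read_text(encoding="utf-8", errors="ignore").splitlines())
            hint = " (large, consider splitting)" if count > max_lines else ""
            lines.append(f"- {f.name}: {count} lines{hint}")
        nav = directory / NAV_FILE
        nav.write_text("\n".join(lines) + "\n", encoding="utf-8")
        written.append(nav)
    return written
```

A real project would also want to skip dependency and build directories, and the agent can then enrich each generated map with a one-line description per file.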

9. Use Technologies Agents Know Well

    Agent Training Data Coverage
    ════════════════════════════

    Next.js        ████████████████████████░░   High coverage, recent docs
    Ruby on Rails  ████████████████████████░░   High coverage, mature
    Convex         ██████████████░░░░░░░░░░░░   Good coverage, integrated
    SvelteKit      ██████████████░░░░░░░░░░░░   Growing coverage
    New Framework  ███░░░░░░░░░░░░░░░░░░░░░░░   Limited, sparse docs

    ┌──────────────────────────────────────────────────────┐
    │  Knowledge Cutoff: May 2025                          │
    │  ───────────────────────────────────────             │
    │  Agent may reference outdated patterns.              │
    │  Force current docs with direct links + versions.    │
    └──────────────────────────────────────────────────────┘

You should use technologies and tools that agents are well-versed in based on their training data and recency. For example, Ruby on Rails and Next.js have extensive documentation and community resources that agents have been trained on. Integrated platforms like Convex, where your database lives alongside your code, also work well because the entire environment is cohesive and well-documented.

I'm biased because I use Next.js with Convex and Resend—an integrated environment where everything works together. But in general, I think it's about finding a balance between popularity and recency.

Consider training data cutoffs. Agents will often reference 2024 or 2025 information based on their knowledge cutoff dates. When they search for documentation, they might pull outdated information. You should force agents to use current documentation by providing direct links, specifying version numbers, or correcting them when they reference old patterns. Keep your tooling consolidated in one place so the agent isn't jumping between disparate ecosystems with varying levels of documentation quality.
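Specifying version numbers can be automated from the dependency list you already have. A minimal Python sketch, assuming a hypothetical `docs_pins` helper that receives a dependencies mapping like the one in package.json (the package versions in the usage example are made up for illustration):

```python
def docs_pins(dependencies: dict[str, str]) -> str:
    """Render a note telling the agent which versions its docs must match."""
    lines = ["Use documentation that matches these installed versions:"]
    for name in sorted(dependencies):
        version = dependencies[name].lstrip("^~")  # drop npm range prefixes
        major = version.split(".")[0]
        lines.append(f"- {name}@{version}: use the docs for major version {major}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical versions, shown only to illustrate the output format.
    print(docs_pins({"next": "^15.1.0", "convex": "1.17.0"}))
```

Pasting the resulting note into agents.md gives the agent an explicit version to check against whenever it reaches for a pattern from memory.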

Final Thoughts

    ┌─────────────────────────────────────────────────────────┐
    │                                                         │
    │   Claude Code ◄──► Codex 5.2 ◄──► Kimi k2.5 ◄──► ...    │
    │                                                         │
    │   Try them all. Benchmarks mean little.                 │
    │   What matters is how it feels.                         │
    │                                                         │
    └─────────────────────────────────────────────────────────┘

These are the learnings I've accumulated from coding with agents as someone without a traditional coding background.

My final advice? Try them all.

You should try Open Code. You should try Z.ai. You should try the small GLM model. You should try Gemini 3 Pro Preview. Experiment with making your own harness. Try the open-source stuff. Try the private stuff. Just see how it works, because benchmarks mean very little.

What matters is how it feels to use the agent in your codebase. If you're well-practiced with agents, you've built a reliable system, and you've set up an agents.md or CLAUDE.md file, it's going to go way better.

One more thing: push back against the agent. If something feels wrong, say so. If you want another pass, ask for it. Don't just accept the first output. It's faster to course-correct early than to let the agent go down a wrong path for 20 minutes and end up in circles. Your intuition matters—use it.

As a final note, sometimes I'm skeptical about my code being trained on, but if AGI happens, I don't think my code will be valuable anyway. These are the lessons from 2 years of Vibe Coding.

Get Started

Want a template to build from? Check out the Starter Template: agents.md—it's a reference implementation of these strategies that you can customize for your project.

Or better yet: copy this entire article, paste it into a new conversation with your agent, and say "Help me design and implement a CLAUDE.md for my project based on these strategies." The agent will ask about your stack, your workflow, and build something tailored to you.
